By Olusegun Oruame

Nigeria’s Federal House of Representatives has started work on an AI bill, the “Control of Usage of Artificial Intelligence Technology in Nigeria Bill, 2023 (HB.942)”, to provide a codified legal framework for the adoption and use of systems adjudged to be artificial intelligence (AI).


Sponsored by Honourable Sada Soli, the Bill passed its first reading on Wednesday, 22 November 2023. This sets the tone for a formal statute on AI in Nigeria and brings to the fore the debate on whether a regulatory regime for AI technologies is necessary and whether government should regulate AI at all.

National AI Policy

The bill comes just as the National Information Technology Development Agency (NITDA) steps up plans to frame the country’s first National AI Policy. The policy is meant to provide a roadmap for Nigeria’s AI aspirations: sustainable development, higher national productivity, high-end skill sets, innovation and, ultimately, better lives for citizens.

NITDA is pushing for policy intervention in AI, believing that once clear-cut guidelines are in place, AI could drive the growth of the digital economy in a transparent and broad-minded manner.

Even in the absence of a clear policy thrust on AI, Nigeria in 2020 commissioned the National Centre for Artificial Intelligence and Robotics (NCAIR), one of “NITDA’s special purpose vehicles created to promote research and development on emerging technologies and their practical application in areas of Nigerian national interest.”

Nigeria’s pathway to AI is anchored on the NCAIR, a somewhat novel initiative on the continent. In terms of AI policymaking, however, Nigeria is considered to be behind Morocco, South Africa, and Tunisia.

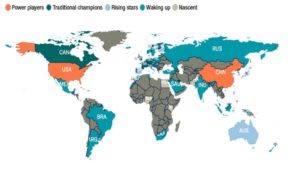

According to DiploFoundation (Diplo), “generally speaking, Africa has been slow in the uptake of artificial intelligence (AI) technologies, for a variety of reasons, from infrastructure challenges to limited financial resources. The Global AI Index, for instance, places the African countries it analyses among ‘waking up’ and ‘nascent’ nations in terms of AI investment, innovation, and implementation: Egypt, Nigeria, and Kenya are nascent, while Morocco, South Africa, and Tunisia are waking up” (see image above).

AI, that uncanny and dizzying ability of a “digital computer or computer-controlled robot to perform tasks commonly associated with intelligent beings,” is no longer a fringe phenomenon. It is becoming part of the very fabric of 21st-century existence, whether in politics, governance, public administration and warfare, economic management, or enterprise sustainability across all sectors. AI is in the air. We are either already breathing it or soon will.

Anxieties over AI

For good reason, global stakeholders are concerned that unregulated AI could spell doom for humanity. Their worries cannot be dismissed.

Tech entrepreneur Elon Musk favours AI regulation because he believes “there is a real danger for digital superintelligence having negative consequences.” Musk leads a group of global tech investors pushing for “clear guidelines and policies for using AI ethically and safely” and for scrutiny of “who’s responsible” for AI across the board.

In Nigeria, both the Speaker of the House of Representatives, Tajudeen Abbas, and the Deputy Speaker, Benjamin Okezie Kalu, have expressed a similar conviction that governments have a responsibility to regulate AI and ensure its progressive use.

Hear Abbas at a recent public function: “Despite the opportunities of AI, there are risks involved. Datasets and algorithms can reflect or reinforce gender, racial, or ideological biases. More critically, AI can deepen inequalities by automating routine tasks and displacing jobs. There is also likely to be a rise in identity theft and fraud, as evidenced by the use of AI to create highly realistic deep fakes.

“These are intended to misinform, trick and confuse people. Attackers use these maliciously crafted videos, photos and audio to create societal unrest, carry out fraud and damage the reputations of individuals and brands.”

Kalu shared his worries during a recent interaction with Meta’s team in Abuja. His words: “[AI] could be abused. We value industry input. We value collaborations and we look forward to engaging with stakeholders like you to ensure that our own AI strategy aligns fully with global best practices, and serves the interests of both technology companies and the public. It is not all AI that would be healthy for our economy but there are many of them when they are adopted and adapted to suit our unique needs will contribute to our national development.”

Andile Ngcaba, Group Chairman of inq Group, a tech company in Lagos, is also canvassing for AI regulation by the Nigerian government. Ngcaba, a private entrepreneur, argues that it is the government’s business to regulate the adoption and administration of AI.

But Abdul-Hakeem Ajijola (AhA), a global cybersecurity expert and Chair of the African Union Cyber Security Expert Group, fears that legislators “might restrict local development options and narrow indigenous opportunities.” He wants more stakeholder engagement to address some of the industry’s concerns.

Managing AI worries with laws and regulations

An unguided AI could reinforce prejudices and falsehoods. “One of the core arguments favoring AI regulations is that it is known to give biased results based on the inherent biases in the data it is trained on. There have been several instances of racial and gender biases finding their way into AI outcomes. We can argue that humans also have biases.”

As a recent report by the Associated Press shows: “Pictures from the Israel-Hamas war have vividly and painfully illustrated AI’s potential as a propaganda tool, used to create lifelike images of carnage. Since the war began last month, digitally altered ones spread on social media have been used to make false claims about responsibility for casualties or to deceive people about atrocities that never happened.”

Josephine Uba, a legal practitioner at Olisa Agbakoba Legal (OAL), notes that the “emergence of AI poses significant challenges to the rule of law, fundamental rights protection, and the integrity of Nigeria’s judicial system. The implications become even more profound when considering the potential incorporation of AI-based decision-making tools within the realms of justice and law enforcement.”

Also worth considering is the view that “beyond the ‘basics’, regulation needs to protect populations at large from AI-related safety risks, of which there are many. Most will be human-related risks. Malicious actors can use Generative AI to spread misinformation or create deepfakes.”

The world takes steps to regulate AI

In the United States, President Joe Biden recently issued a landmark Executive Order to ensure that America leads the way in seizing “the promise and managing the risks of artificial intelligence (AI). The Executive Order establishes new standards for AI safety and security, protects Americans’ privacy, advances equity and civil rights, stands up for consumers and workers, promotes innovation and competition, advances American leadership around the world, and more.”

Most authorities agree that “data science and artificial intelligence legislation began with the General Data Protection Regulation (GDPR) in 2018. As is evident from the name, this act in the European Union is not only about AI, but it does have a clause that describes the ‘right to explanation’ for the impact of artificial intelligence. 2021’s AI Act legislated in the European Union was more to the point. It classified AI systems into three categories:

- Systems that create an unacceptable amount of risk must be banned

- Systems that can be considered high-risk need to be regulated

- Safe applications, which can be left unregulated”

In Canada, the proposed 2022 Artificial Intelligence and Data Act (AIDA) would regulate all “companies using AI with a modified risk-based approach”. India takes a somewhat different approach to the “growth and proliferation of AI. While the government is keen to regulate generative AI platforms like ChatGPT and Bard, there is no plan for a codified law to curb the growth of AI.”

Africa takes steps for AI regulation

Africa is also gradually responding to the realities of AI. There are now over 2,400 AI organisations on the continent, a sign of rising interest in the technology.

DiploFoundation observed that “AI is discussed in African countries in the context of public sector reform, education and research, national competitiveness, and partnerships with tech companies. Countries with the relevant capacities focus on skills, talent, and capacity development to build local and regional expertise.”

Diplo further reports that “Three African countries have made efforts to advance policy documents dedicated specifically to AI: Mauritius, Egypt, and Kenya. Mauritius’s AI strategy, published in 2018, describes AI and other emerging technologies as having the potential to address, in part, the country’s social and financial issues and as ‘an important vector of revival of the traditional sectors of the economy as well as for creating a new pillar for the development of our nation in the next decade and beyond’.

“Egypt has a national AI strategy (2021) built around a two-fold vision: exploiting AI technologies to support the achievement of SDGs, and establishing Egypt both as a key actor in facilitating regional cooperation on AI and as an active international player. The strategy focuses on four pillars: AI for government, AI for development, capacity building, and international activities. Egypt’s goal to foster bilateral, regional, and international cooperation on AI is to be achieved through activities such as active participation in relevant international initiatives and forums, launching regional initiatives to unify voices and promote cooperation, promoting AI for development as a priority across regional and international forums, and initiating projects with partner countries.

“Kenya’s government started exploring the potential of AI in 2018 when it created the Distributed Ledgers Technology and AI Task Force to develop a roadmap for how the country can take full advantage of these technologies. The report the task force published in 2019 notes that AI and other frontier technologies can increase national competitiveness and accelerate the rate of innovation, ‘propelling the country forward and positioning [it] as a regional and international leader in the ICT domain’.

“Ethiopia, Ghana, Morocco, Rwanda, South Africa, Tunisia, and Uganda are also taking steps towards defining AI policies. Ghana and Uganda have been part of the Ethical Policy Frameworks for Artificial Intelligence in the Global South, a pilot project conducted in 2019 by UN Global Pulse and the German Federal Ministry for Economic Cooperation and Development, and dedicated to supporting the development of local policy frameworks for AI.”